Security in cloud computing is one of the most argued, discussed and debated topics. It’s also one of the most common questions I get in my sessions.

When all is said and done, I personally believe that security, in the cloud or not, has two major characteristics.

- Security is a policy-based feature

- Security is a shared responsibility

OK, leaving the general arguments about security aside, let’s jump into data security in Azure Blob storage.

What are the data security concerns in Azure Blob storage?

- How secure are the Azure data centers?

- How is data secured in transit?

- Does Microsoft look at or access my data stored in Azure Blob storage?

- What policies should I follow in order to store data securely in Azure Blob storage?

Let’s discuss each point one by one.

How secure are the Azure data centers?

If you’re concerned that Azure data centers aren’t secure enough and that a physical security breach could easily happen, then you’re not going to use Azure in the first place. There are also plenty of articles and videos on the Internet explaining the data center security features of Microsoft Azure.

How is data secured in transit?

All communication with Azure, and between the different Azure data centers, happens over HTTPS, so it is secured by TLS. Some services (the Blob service included) can also be accessed over plain HTTP, but this is turned off by default and turning it on is not recommended unless specifically required.

Does Microsoft look at or access my data stored in Azure Blob storage?

The direct answer from Microsoft is no, and to my knowledge that is true. But as per the agreement, Microsoft might collect telemetry data about your blob storage for billing, usage patterns and service improvement.

What policies should I follow in order to store data securely in Azure Blob storage?

All the corporate policies that you apply on premises, plus any additional policies your organization has defined for storing data outside the corporate firewall, should be applied when using Azure Blob storage. These are unique to each company.

With all that information, why do you need to encrypt data at rest with any public cloud provider?

This is a question I ask customers when they request that I encrypt the data. Of course there are several reasons. Mostly it is due to corporate policies which state that any data at rest outside the corporate firewall should be encrypted. There are also corporate policies that require the data to stay within a specific political and geographic boundary, which is another concern when architecting applications and selecting appropriate redundancy policies.

Other than the above reasons, some customers have said that they want to protect the data from the public cloud service provider itself and prevent it from reading the data. This is acceptable to some extent, but it raises plenty of other questions, such as how and where to keep the encryption keys and how to secure them.

Encrypting data at rest in Azure Blob Storage

At the time of writing, Azure Blob storage does not provide any built-in mechanism to encrypt data at rest. We have to handle this in our application.

First, the selection of the encryption scheme is very important. Since we’re talking about encrypting data at rest, symmetric encryption serves the purpose well: the same application encrypts and decrypts the data, so there is no need to complicate things with asymmetric encryption.
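
To make the symmetric choice concrete, here is a minimal sketch in C#. It assumes AES from .NET's `System.Security.Cryptography`, which is my pick for illustration and not something this post prescribes. The class and method names are hypothetical. The only point is that one key does both jobs.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: names are illustrative only.
static class SymmetricSketch
{
    // Encrypts with AES (CBC mode and PKCS7 padding are the .NET defaults).
    public static byte[] Encrypt(byte[] plain, byte[] key, byte[] iv)
    {
        using (Aes aes = Aes.Create())
        using (ICryptoTransform enc = aes.CreateEncryptor(key, iv))
            return enc.TransformFinalBlock(plain, 0, plain.Length);
    }

    // Decrypts with the very same key and IV that were used to encrypt.
    public static byte[] Decrypt(byte[] cipher, byte[] key, byte[] iv)
    {
        using (Aes aes = Aes.Create())
        using (ICryptoTransform dec = aes.CreateDecryptor(key, iv))
            return dec.TransformFinalBlock(cipher, 0, cipher.Length);
    }

    static void Main()
    {
        byte[] key, iv;
        using (Aes aes = Aes.Create())
        {
            key = aes.Key; // random 256-bit key generated by the framework
            iv = aes.IV;   // random 128-bit initialization vector
        }

        byte[] cipher = Encrypt(Encoding.UTF8.GetBytes("blob content"), key, iv);
        byte[] plain = Decrypt(cipher, key, iv);
        Console.WriteLine(Encoding.UTF8.GetString(plain));
    }
}
```

With asymmetric encryption you would have to manage a key pair and you would still typically end up encrypting the bulk data with a symmetric key, so for data that the same application writes and reads the extra machinery buys little.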

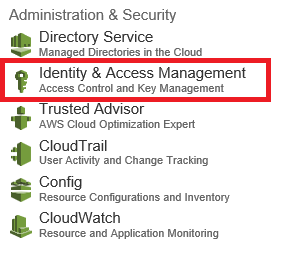

Second, where to keep the encryption keys: in most application designs, keeping the keys in a database, in either encrypted or unencrypted form, is common practice. If you’re encrypting your keys then you need a master key. You can design your own key management policy for your application or use Azure Key Vault for key management, which is still in preview at the time of writing. One idea I’ve been researching is using an algorithm as the master key: it would generate master keys from given parameters and handle master key expiration policies, which would save us from the problems of persisting keys. (I will write a separate post about this.)
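
One way to picture the "encrypted keys plus a master key" option is key wrapping: each data key is itself encrypted with the master key before it goes into the database. The sketch below is only an illustration under my own assumptions (AES-CBC from `System.Security.Cryptography`, a 16-byte IV stored in front of the wrapped key, hypothetical names `KeyWrapSketch`, `Wrap` and `Unwrap`). It is not how Azure Key Vault works internally, and it does not authenticate the wrapped key, which a production design should.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;

// Hypothetical helper: names and storage layout are assumptions.
static class KeyWrapSketch
{
    // Encrypts a data key with the master key; returns IV followed by ciphertext.
    public static byte[] Wrap(byte[] masterKey, byte[] dataKey)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = masterKey;
            aes.GenerateIV(); // fresh IV for every wrapped key
            using (ICryptoTransform enc = aes.CreateEncryptor())
            {
                byte[] cipher = enc.TransformFinalBlock(dataKey, 0, dataKey.Length);
                return aes.IV.Concat(cipher).ToArray();
            }
        }
    }

    // Reads the IV from the first 16 bytes and decrypts the rest.
    public static byte[] Unwrap(byte[] masterKey, byte[] wrapped)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = masterKey;
            aes.IV = wrapped.Take(16).ToArray();
            using (ICryptoTransform dec = aes.CreateDecryptor())
                return dec.TransformFinalBlock(wrapped, 16, wrapped.Length - 16);
        }
    }

    static void Main()
    {
        byte[] masterKey = new byte[32];
        byte[] dataKey = new byte[32];
        using (var rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(masterKey);
            rng.GetBytes(dataKey);
        }

        byte[] wrapped = Wrap(masterKey, dataKey);      // this is what the database would hold
        byte[] unwrapped = Unwrap(masterKey, wrapped);  // done in memory when a blob is read
        Console.WriteLine(unwrapped.SequenceEqual(dataKey));
    }
}
```

The database then never holds a usable key on its own, but the problem of protecting the master key remains, which is exactly what a key management policy or Key Vault is meant to address.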

Third, we should be aware of when and how to apply the encryption: as a best practice, always encrypt and decrypt the memory streams of your blobs. This ensures that the data is encrypted before it hits the disk.

In a web application, encryption and decryption happen on the web server. The diagram shows a scenario with a web app and blob storage in place, and how encryption and decryption happen for the data at rest.

Azure Encryption Extensions is a great library for this (https://github.com/stefangordon/azure-encryption-extensions).

NuGet: Install-Package AzureEncryptionExtensions

Performing the encryption and decryption in the stream helps to protect the data before it hits the disk. See the code below.
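
As a rough sketch of stream-level encryption, the example below uses only `CryptoStream` and `Aes` from the .NET framework. It deliberately does not use the Azure Encryption Extensions API, and the blob upload and download calls are left out. The names `StreamEncryptionSketch`, `Encrypt` and `Decrypt`, and the choice to write the IV as the first 16 bytes of the output, are my own assumptions for illustration.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: names and the IV-prefix layout are assumptions.
static class StreamEncryptionSketch
{
    // Writes the IV, then the AES ciphertext of `input`, to `output`.
    public static void Encrypt(Stream input, Stream output, byte[] key)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = key;
            aes.GenerateIV();
            output.Write(aes.IV, 0, aes.IV.Length);
            using (ICryptoTransform enc = aes.CreateEncryptor())
            {
                // Not disposed on purpose: disposing would close `output`.
                var crypto = new CryptoStream(output, enc, CryptoStreamMode.Write);
                input.CopyTo(crypto);
                crypto.FlushFinalBlock(); // writes the final padded block
            }
        }
    }

    // Reads the IV from the start of `input`, then decrypts the rest into `output`.
    public static void Decrypt(Stream input, Stream output, byte[] key)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = key;
            byte[] iv = new byte[16];
            int read = 0;
            while (read < iv.Length)
            {
                int n = input.Read(iv, read, iv.Length - read);
                if (n == 0) throw new InvalidDataException("Stream too short to contain an IV.");
                read += n;
            }
            aes.IV = iv;
            using (ICryptoTransform dec = aes.CreateDecryptor())
            {
                var crypto = new CryptoStream(input, dec, CryptoStreamMode.Read);
                crypto.CopyTo(output);
            }
        }
    }

    static void Main()
    {
        byte[] key = new byte[32];
        using (var rng = RandomNumberGenerator.Create()) rng.GetBytes(key);

        var plain = new MemoryStream(Encoding.UTF8.GetBytes("file contents"));
        var encrypted = new MemoryStream();
        Encrypt(plain, encrypted, key);

        // In a real app, `encrypted` is what would be handed to the blob upload call (not shown).
        encrypted.Position = 0;
        var decrypted = new MemoryStream();
        Decrypt(encrypted, decrypted, key);
        Console.WriteLine(Encoding.UTF8.GetString(decrypted.ToArray()));
    }
}
```

Because both methods take plain `Stream` arguments, the plaintext only ever exists in the web server's memory, and whatever is written to storage is already ciphertext.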

You can find the sample code, a Web API service that uploads and downloads content to and from Azure Blob storage with symmetric encryption, in my Git repo:

https://github.com/thuru/azurestorageencryptionsample