Disclaimer: This is an opinionated post. The views expressed are based solely on my own experience and observations.

AAG – AWS, Azure & GCP

AWS, Azure, and GCP come from Amazon, Microsoft, and Google respectively. These companies have different roots, values, strengths, and weaknesses. Each has a different DNA, which influences its cloud services in different ways. This article offers a different perspective: how the distinct DNA of each organization has shaped its position in the cloud market.

AWS – The Retail DNA

AWS inherits its retail DNA from Amazon’s e-commerce business culture. A few notable traits of the retail DNA are shipping fast to be first to market, focusing on volume rather than margins, and packaging goods under its own brand, known as private labeling.

Amazon launched AWS in 2006. Early adopters and open-source folks went with AWS, including many of today’s successful startups that were growing up during 2008-2013. Although Microsoft launched Azure during that period, in 2010, it was less mature, and Microsoft did not have a good rapport with open-source communities at the time. Being first to market without serious competition, AWS took full advantage of the situation during that period.

AWS follows a continuous innovation cycle and keeps releasing new services, even when those services are less popular or useful only to a small set of customers. AWS does this to be first in the market, without worrying much about the bottom line.

Another interesting trait of the retail DNA is private labeling. Private labeling is a business technique in which retailers package common goods from suppliers under their own labels, with some added value. AWS uses this technique very cleverly. AWS has an inherent weakness: it has no established software or operating systems of its own. This does not play well for AWS when it comes to cloud lock-in or offering customers generous discounts on software licenses. However, AWS has been battling this challenge successfully by using private labeling to create its own services. One example is Aurora, which is a private label of MySQL/Postgres; Redshift is another successful example.

Azure – The Modern Enterprise DNA

Azure has the DNA of a modern enterprise. The modern enterprise DNA combines old traits, such as a bottom-line focus, a partner ecosystem, and speaking the corporate lingo, with modern traits such as innovation, openness, and a platform strategy.

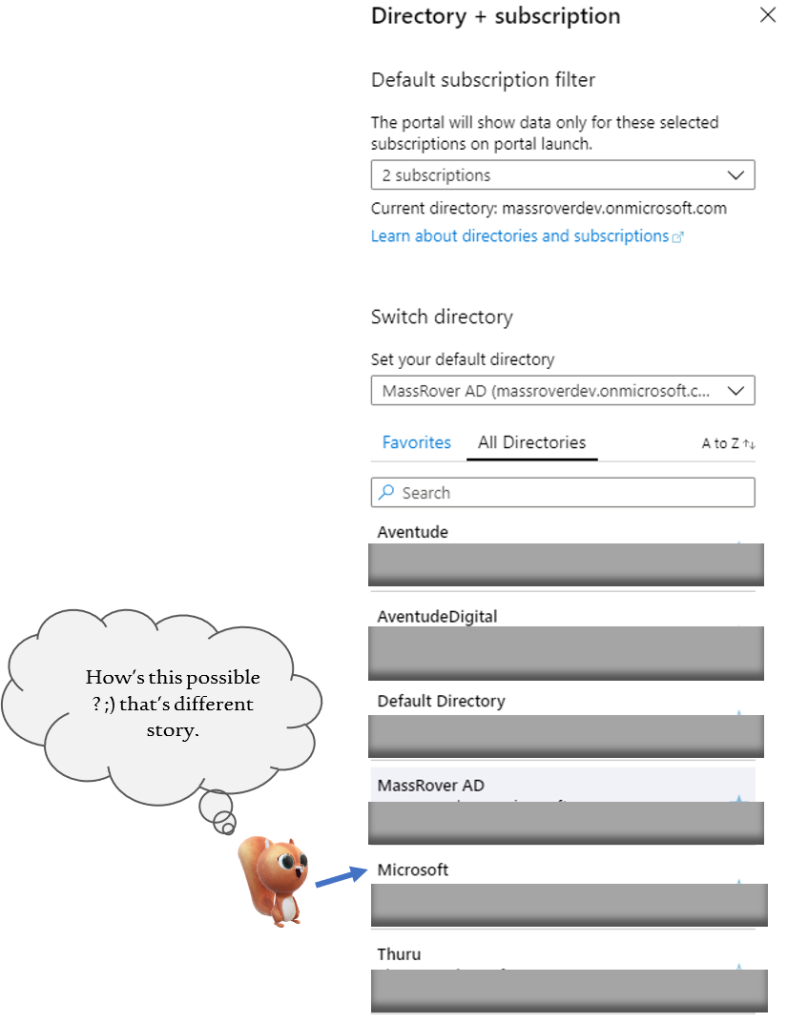

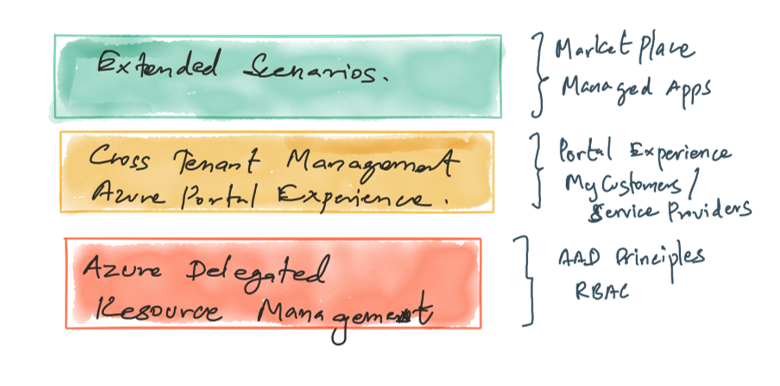

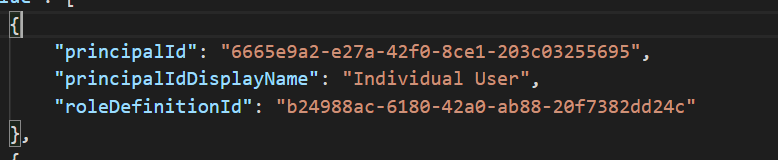

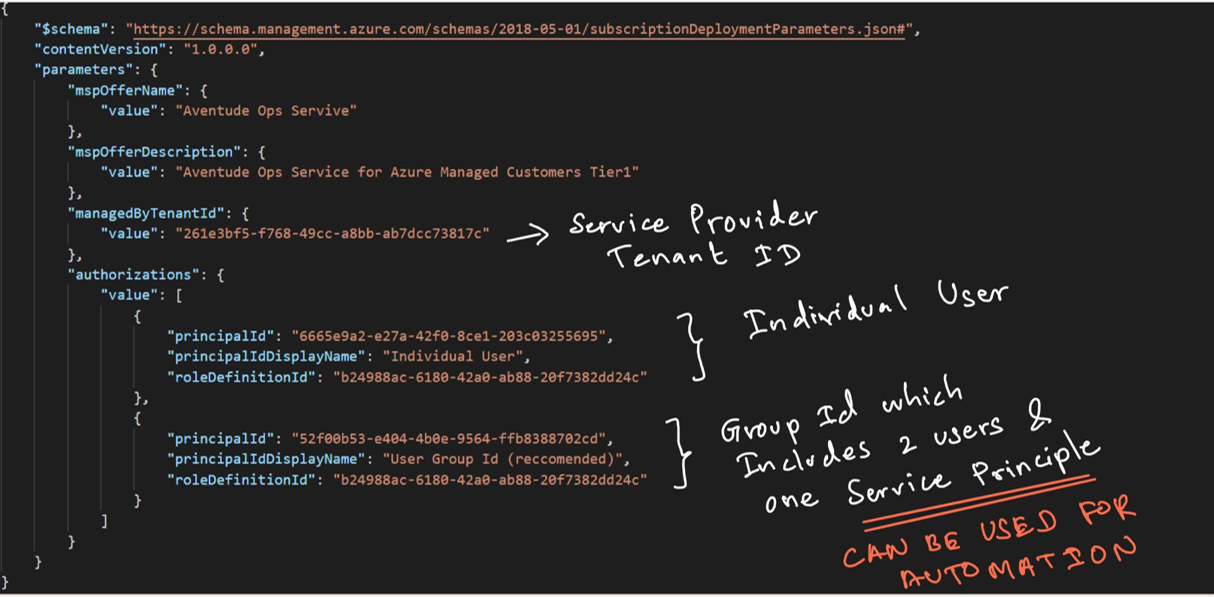

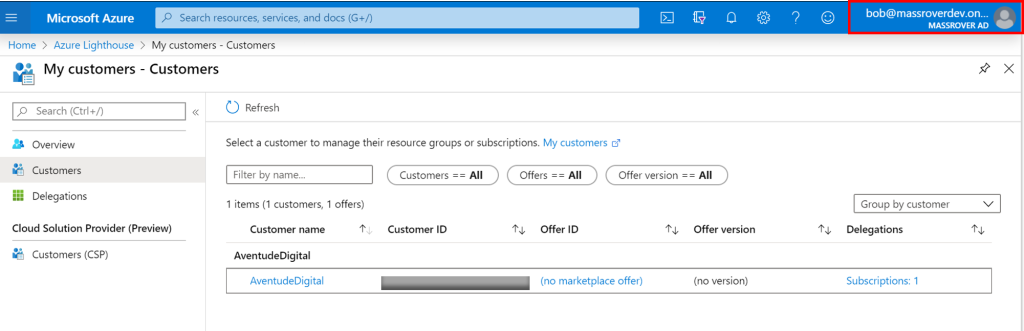

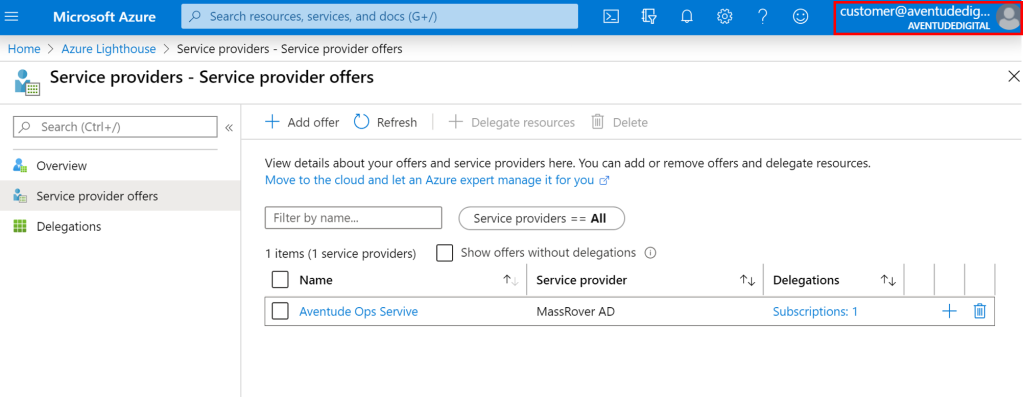

Azure is not a laggard when it comes to innovation. It has its own share of innovative services, with more focus on developer productivity and enterprise adoption. Azure Active Directory, Azure Cosmos DB, Azure Functions, and Azure Lighthouse are a few of its enterprise-focused innovative services.

Generally, Azure targets its innovations at stable markets where it anticipates greater adoption; it does not invest much in niche areas just to appear cool. This may be because of its traditional, bottom-line-focused business orientation. Because of this trait, Azure sometimes terminates services at the beta stage without ever releasing them to General Availability, favoring stable, high-reach innovations over diversity in its service portfolio.

Having a rich partner ecosystem is another key strength of Microsoft. It has given Microsoft an unbeatable position in the hybrid cloud market with its Azure Stack suite. Azure Stack is a portfolio of products that extends Azure capabilities to any environment. It comprises three products: Azure Stack Edge, Azure Stack HCI, and Azure Stack Hub. In other words, Azure Stack is Azure in different versions, loaded onto different hardware and bundled for customers with different hybrid cloud demands. Only Microsoft can do this, thanks to its long-standing partner ecosystem and OEM partner network.

GCP – Internet Services DNA

GCP has the DNA of an Internet services company, which is no surprise given that it comes from Google. Google leads in Internet-based consumer services; we all use Google services in our day-to-day lives. The Internet services DNA prioritizes individual services over a whole platform, and B2C over B2B.

GCP is the third-largest cloud provider by revenue, but the gap between GCP and Azure is big. GCP also faces serious competition from Alibaba Cloud.

GCP has all the foundational building blocks of a modern cloud, but it lacks the rich portfolio of services that AWS and Azure have. GCP sometimes sells the same thing under different packaging. For example, its API management service is listed as both ‘New Business Channels using APIs’ and ‘Unlocking Legacy Applications using APIs’. Those are two use cases of the same product, not two different services. Some may argue that this is simply a way to attract customers with two different needs, but other cloud providers do not use the same trick in their product lists.

Google is a successful Internet services company and should have been the leader in cloud computing. Ironically, that did not happen because Google did not believe in the enterprise business. It was focused on Internet-based services and on generating revenue from advertising, so individual users mattered more than big businesses. By the time Google realized that large corporations are the biggest customers of the cloud business, it was a bit too late, and it had to bring in leadership from outside to instill that thinking.

Google’s Internet services DNA has made GCP fragmented; the perception of GCP as one solid platform is largely missing. Most of us use GCP services without paying much attention to the whole platform. We use Google Maps in applications, Firebase has become a necessity for mobile development, and we use Google search APIs, but we see them as individual services, not as a single cloud platform. Single-platform thinking is essential to winning enterprise customers, and the lack of that perception is a major downside for GCP.

However, it is not all bad for GCP. Despite these odds, Google seems happy with what it is doing. GCP is showing an upward trend in revenue and has recently won a few notable enterprise customers.