Preparing the video for HTTP Adaptive Streaming using AMS

I assume you have read Part 1 of this series and understand what HTTP Adaptive Streaming is and why we need to support it in HTML 5.

HTTP adaptive streaming allows the browser to request video fragments of varying quality as the available bandwidth changes. To serve fragments at different quality levels, the media must be encoded at each of those levels ahead of time and kept ready to be streamed. For example, if you have a video in 4K quality, you have to encode it at lower qualities such as 1080p, 720p and 480p. This produces an individual file for each quality level.

Note: Upscaling typically does not produce good-quality video. If your original video is in 2K quality, encoding it at lower qualities produces the best output, whereas encoding it at 4K will usually not produce a clear 4K picture.
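To make the "no upscaling" rule concrete, here is a minimal sketch that picks the output heights worth encoding for a given source. The `RenditionLadder` class, the `TargetsFor` method and the list of candidate heights are hypothetical names and values chosen for illustration; they are not part of AMS, which selects the renditions through its encoding presets.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RenditionLadder
{
    // Hypothetical ladder of output heights in pixels, highest first.
    private static readonly int[] CandidateHeights = { 2160, 1440, 1080, 720, 480, 360 };

    // Returns every candidate height at or below the source height,
    // so that no rendition is upscaled from the source.
    public static List<int> TargetsFor(int sourceHeight)
    {
        return CandidateHeights.Where(h => h <= sourceHeight).ToList();
    }
}
```

With this sketch, `RenditionLadder.TargetsFor(1080)` yields 1080, 720, 480 and 360, and skips 1440 and 2160 because they would require upscaling.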

So the first step in encoding the video at different qualities is uploading the source file. This is known as ingestion in the media workflow.

Assuming that you have AMS up and running in your Azure account, the following code snippet uploads your video file to AMS. When creating the AMS account, you have to create a new storage account or associate an existing one with it. This storage account is used to store the source files and the encoding output files (these files are known as assets).

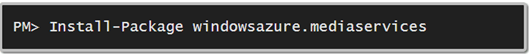

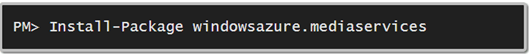

First, install the AMS NuGet packages. The snippet below then creates the media context and uploads the file.

    using Microsoft.WindowsAzure.MediaServices.Client;

    // Credentials of the AMS account
    var mediaServicesAccountName = "<your AMS account name>";
    var mediaServicesKey = "<AMS key>";

    var credentials = new MediaServicesCredentials(mediaServicesAccountName, mediaServicesKey);
    var context = new CloudMediaContext(credentials);

    // Ingest: upload the source file as a new asset
    var ingestAsset = context.Assets.CreateFromFile("minions.mp4", AssetCreationOptions.None);

After the upload, we encode the file with one of the available HTTP Adaptive Streaming encoding presets. The encoding job produces files of different qualities from the same source. At the time of this writing, AMS supports 1080p as the maximum ABR (adaptive bitrate) encoding quality.

The following lines of code submit the encoding job to the AMS Encoder. As with other Azure services, you can scale the number and type of encoders based on your demand. When the encoding is completed, AMS puts the output files in the storage account under the specified asset name (adaptive minions MP4).

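    // Requires: using System; using System.Threading;
    // Create an encoding job that produces the 1080p adaptive bitrate MP4 set
    var job = context.Jobs.CreateWithSingleTask(
        MediaProcessorNames.AzureMediaEncoder,
        MediaEncoderTaskPresetStrings.H264AdaptiveBitrateMP4Set1080p,
        ingestAsset,
        "adaptive minions MP4",
        AssetCreationOptions.None);

    job.Submit();

    // Block until the job finishes, printing the overall progress along the way
    job = job.StartExecutionProgressTask(
        p => Console.WriteLine(p.GetOverallProgress()),
        CancellationToken.None).Result;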

Once the job is finished, we can retrieve the encoded asset, apply policies to it and create locators. Locators can carry conditions on the access permission and the availability period. Creating a locator publishes the asset.

    var encodedAsset = job.OutputMediaAssets.First();

    // Deliver over DASH without dynamic encryption
    var policy = context.AssetDeliveryPolicies.Create("Minion Policy",
        AssetDeliveryPolicyType.NoDynamicEncryption, AssetDeliveryProtocol.Dash, null);
    encodedAsset.DeliveryPolicies.Add(policy);

    // Publish the asset: a read-only streaming locator valid for 7 days
    context.Locators.Create(LocatorType.OnDemandOrigin, encodedAsset, AccessPermissions.Read, TimeSpan.FromDays(7));

    // Manifest URI for DASH playback
    var dashUri = encodedAsset.GetMpegDashUri();

We can retrieve URLs for different streaming protocols such as HLS, Smooth Streaming and DASH. Since our discussion focuses on delivering HTTP adaptive streaming over HTML 5, we construct the DASH URI for the asset.

This is the manifest URI, which should be used in HTTP adaptive streaming playback.
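To show how the manifest URIs for the different protocols relate to each other, here is a minimal sketch that builds them by hand from the locator path and the name of the asset's `.ism` manifest file. It assumes the usual AMS on-demand URL pattern, where a `format` suffix on the manifest selects the protocol. The `StreamingUrls` class and its method names are hypothetical; in real code, helpers such as `GetMpegDashUri()` do this for you.

```csharp
using System;

public static class StreamingUrls
{
    // Smooth Streaming: <locator path>/<manifest file>.ism/manifest
    public static string Smooth(string locatorPath, string ismFileName)
    {
        return locatorPath.TrimEnd('/') + "/" + ismFileName + "/manifest";
    }

    // MPEG-DASH: same manifest URL with the mpd-time-csf format suffix (assumed pattern).
    public static string Dash(string locatorPath, string ismFileName)
    {
        return Smooth(locatorPath, ismFileName) + "(format=mpd-time-csf)";
    }

    // HLS: same manifest URL with the m3u8-aapl format suffix (assumed pattern).
    public static string Hls(string locatorPath, string ismFileName)
    {
        return Smooth(locatorPath, ismFileName) + "(format=m3u8-aapl)";
    }
}
```

For a placeholder locator path `https://example.invalid/locator-id/` and manifest file `minions.ism`, `StreamingUrls.Dash` returns `https://example.invalid/locator-id/minions.ism/manifest(format=mpd-time-csf)`.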

In Part 1 of this series, I explained what the HTML 5 video tag does and that the browser on its own does not know how to request different fragments as the bandwidth varies. To do this, we need DASH support in the browser.

There are several implementations of the DASH player. We will use Azure Media Player for the playback, since it has fallbacks to other technologies if DASH is not supported.

Note: AMS should be configured with at least one streaming endpoint in order to enable HTTP Adaptive Streaming.
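As a rough illustration of what a DASH-capable player does with the manifest, here is a minimal sketch of a throughput-based selection rule: pick the highest bitrate that fits within a fraction of the measured bandwidth. The `BitrateSelector` class, the 0.8 safety factor and the sample bitrates are assumptions made for illustration. Real players, Azure Media Player included, may use more elaborate heuristics such as buffer level.

```csharp
using System;
using System.Linq;

public static class BitrateSelector
{
    // Picks the highest bitrate that fits within a safety fraction of the
    // measured bandwidth. Falls back to the lowest bitrate when nothing fits.
    public static int Choose(int[] availableKbps, double measuredKbps, double safetyFactor = 0.8)
    {
        if (availableKbps == null || availableKbps.Length == 0)
            throw new ArgumentException("At least one bitrate is required.", "availableKbps");

        double budget = measuredKbps * safetyFactor;
        var fitting = availableKbps.Where(b => b <= budget).ToArray();
        return fitting.Length > 0 ? fitting.Max() : availableKbps.Min();
    }
}
```

With renditions at 400, 1000, 2500 and 5000 kbps, a measured bandwidth of 3500 kbps selects the 2500 kbps rendition, and a drop to 300 kbps falls back to 400 kbps. The player repeats this decision for each fragment it requests, which is what makes the stream adaptive.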