Azure Active Directory (AAD) is a cloud identity management solution, but it is not limited to cloud identities alone. In this post, let’s discuss how AAD can be used to design multi-tenant applications in the cloud. As usual, consider that MassRover is an ISV.

MassRover had the idea of developing a document management application for enterprises, named ‘Minion Docs’. They built it as an intranet application using Windows Authentication. VTech was the first customer of Minion Docs, and MassRover installed the application on premises (in the VTech data centers).

After a while, VTech started complaining that its users wanted to access the application from outside the organization, securely and from different devices. VTech also mentioned that it was planning to move to Azure.

MassRover decided to move the application to the cloud in order to retain its customers. They also realized that moving to the cloud would open up the opportunity to serve multiple clients and introduce new business models.

Creating Multi-Tenant Applications

The intranet story I explained above is very common in enterprises.

Organizations have a burning need to handle modern application demands such as mobility, single sign-on and BYOD without compromising their existing infrastructure and investments.

The MassRover team decided to move the application to Azure in order to solve those problems and leverage the benefits of the cloud.

First, MassRover got an Azure subscription and registered Minion Docs as a multi-tenant application in their AAD. For an existing intranet application, this requires very little rewriting and is mostly a matter of configuration.

The setup window below is the out-of-the-box ASP.NET Azure Active Directory multi-tenant template that you see in Visual Studio.

Registering an application in AAD as a multi-tenant application allows other AAD administrators to sign up and start using our application. Since Minion Docs is an AAD application, there are two primary ways that VTech can use Minion Docs.

- Sync local AD with AAD along with passwords – lets users sign in with their Active Directory credentials even though there’s no live connection between the local AD and AAD.

- Federate the authentication to local AD – users use the same Active Directory credentials, but the authentication takes place in the local AD.

The only significant difference between the two methods is where the authentication takes place: in AAD or in the federated local AD.

Local AD synced with Azure Active Directory with passwords

VTech IT decides to sync their local AD with their AAD along with the passwords. The VTech AD administrator then signs up for Minion Docs and grants it the requested permissions (read / write).

What happens here?

- MassRover created and registered Minion Docs as a multi-tenant Azure Active Directory application in their Azure Active Directory.

- VTech has a local AD, the domain controller that was used by the Minion Docs intranet application.

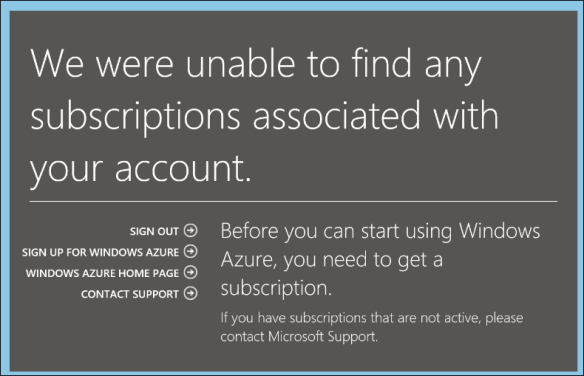

- VTech purchases an Azure subscription and syncs their local AD to their Azure Active Directory along with the passwords.

- The VTech Azure Active Directory admin signs up for the Minion Docs application. During this process, the VTech admin also grants the requested permissions to Minion Docs.

- After the sign-up, Minion Docs is displayed under the ‘Applications my company uses’ category in VTech’s AAD.

- Now a user named ‘tom’ can sign in to the Minion Docs application with the same local AD credentials.
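The sign-up step above can be sketched as building an authorization URL against the tenant-independent `common` endpoint, which is what lets an admin from any AAD tenant reach a multi-tenant application. This is a minimal sketch, not the code the Visual Studio template generates: the client ID, redirect URI and function name are hypothetical, and I am assuming the v1 endpoint, where `prompt=admin_consent` asks the admin to grant permissions on behalf of the whole organization.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Tenant-independent authority used by multi-tenant applications.
AUTHORITY = "https://login.microsoftonline.com/common"


def build_signup_url(client_id, redirect_uri, state, admin_consent=True):
    """Return the URL a customer admin is redirected to for sign-up.

    client_id and redirect_uri are placeholders for the values that
    MassRover would get when registering Minion Docs in its own AAD.
    """
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "state": state,  # opaque value echoed back to protect against CSRF
    }
    if admin_consent:
        # Assumption: v1 endpoint behaviour, consent for the whole tenant.
        params["prompt"] = "admin_consent"
    return f"{AUTHORITY}/oauth2/authorize?{urlencode(params)}"


signup_url = build_signup_url(
    client_id="00000000-0000-0000-0000-000000000000",  # hypothetical
    redirect_uri="https://miniondocs.example/signup/callback",  # hypothetical
    state="random-state-value",
)
```

A regular user such as tom would go through the same endpoint without the admin consent prompt, which is why the helper makes it optional.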

Sign in Work Flow

A few things to note

- Minion Docs is not involved in the authentication process.

- Minion Docs gets the AAD token based on the permissions granted to the Minion Docs application by the VTech AAD admin.

- Minion Docs can use the token to request additional claims about the user, and it will get them if they are allowed.

- Authorization within the application is handled by Minion Docs.
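To make the last two points concrete, here is a small sketch of how an application could read claims from a token and then apply its own authorization rules. It only decodes the token payload to show its shape and deliberately does NOT validate the signature, which a real application must do before trusting any claim. The sample token, the role table and the helper names are all made up for illustration; `tid` (tenant ID) and `upn` (user principal name) are claims that AAD tokens carry.

```python
import base64
import json


def decode_claims_unverified(jwt_token):
    """Decode the payload of a JWT WITHOUT verifying its signature.

    Illustration only: production code must validate the signature,
    issuer and audience before using the claims.
    """
    parts = jwt_token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a token of the form header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url(obj):
    """Helper used only to fabricate a sample token for this sketch."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Hypothetical in-app role table: AAD tells us who the user is,
# Minion Docs decides what that user may do.
ROLES = {("vtech-tenant-id", "tom@vtech.example"): "editor"}


def can_edit(claims):
    """Application-level authorization based on tenant and user claims."""
    role = ROLES.get((claims.get("tid"), claims.get("upn")))
    return role == "editor"


sample_token = ".".join([
    _b64url({"alg": "none"}),
    _b64url({"tid": "vtech-tenant-id", "upn": "tom@vtech.example"}),
    "signature-placeholder",
])
claims = decode_claims_unverified(sample_token)
allowed = can_edit(claims)
```

The split is the important part: the identity comes from the token, while the decision about what tom can do stays inside the application.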

Local AD is federated using ADFS

This is the second use case, where the local AD is synced with AAD but VTech decides to federate the authentication to the local Active Directory. To do this, VTech must first enable and configure ADFS. ADFS doesn’t release any claims by default, so the VTech admin must specify the claims as well.

Federated Sign in Work Flow

In the federated scenario the authentication happens in the local AD.

As the ISV, MassRover does not have to care whether the authentication happens in the customer’s AAD or in their local AD. Minion Docs works the same way in both cases.

Tenant Customization

Anyone with the appropriate AAD admin rights can sign up for Minion Docs, but this is not the desired behavior.

A better approach is to notify the Minion Docs administrators with the tenant details during the first sign-up, so that they can decide whether to approve the tenant. In subscription scenarios, this could be automated.

As a simple example, consider Voodo, another customer who wants to use Minion Docs. The Voodo admin signs up, but Voodo has to complete a payment before it is added as an approved tenant in the Minion Docs database. Once the payment is done, Voodo is added to the database. This is simple and easy to implement.
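The Voodo flow can be sketched as a small tenant registry that keeps newly signed-up tenants in a pending state until payment is confirmed. This is only an in-memory illustration under my own assumptions: the class, method names and tenant ID are hypothetical, and a real Minion Docs would keep this in its database and key it on the tenant ID from the AAD token.

```python
from dataclasses import dataclass, field


@dataclass
class TenantRegistry:
    """In-memory stand-in for the approved-tenants table."""
    pending: dict = field(default_factory=dict)   # tenant_id -> name
    approved: dict = field(default_factory=dict)  # tenant_id -> name

    def sign_up(self, tenant_id, name):
        """Record a first sign-up; the tenant is not yet allowed in."""
        if tenant_id not in self.approved:
            self.pending[tenant_id] = name

    def mark_paid(self, tenant_id):
        """Move a tenant from pending to approved once payment is done."""
        if tenant_id not in self.pending:
            raise KeyError(f"unknown or already approved tenant: {tenant_id}")
        self.approved[tenant_id] = self.pending.pop(tenant_id)

    def can_sign_in(self, tenant_id):
        """Only approved tenants may use the application."""
        return tenant_id in self.approved


registry = TenantRegistry()
registry.sign_up("voodo-tenant-id", "Voodo")        # admin signs up
before_payment = registry.can_sign_in("voodo-tenant-id")
registry.mark_paid("voodo-tenant-id")                # payment completed
after_payment = registry.can_sign_in("voodo-tenant-id")
```

The same `can_sign_in` check would run on every sign-in, so a tenant whose admin has consented in AAD but has not been approved still cannot use the application.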