Introduction

At the time of this writing, Azure Key Vault is in preview. It is a secure store for string-based confidential information.

I say string-based because people are sometimes confused about what can be stored inside the Key Vault: you can store a key used for encrypting a file, but you cannot store the encrypted file itself as a file object.

Azure Key Vault – http://azure.microsoft.com/en-gb/services/key-vault/

Key Vault stores 2 types of information:

- Keys

- Secrets

Secrets – A secret can be any sequence of bytes under 10 KB. Secrets can be retrieved back from the vault, which makes them well suited to sensitive information the application needs to read, like connection strings, passwords, etc. From the design point of view, we can either retrieve a secret every time we need it or retrieve it once and store it in a cache.
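The "retrieve once and cache" option could look roughly like the sketch below. It is only an illustration under assumptions: `SecretCache`, `fetch` and the expiry time are hypothetical names of mine, and `fetch` stands in for whatever call actually reads a secret from the vault (it is not the Key Vault SDK).

```python
import time


class SecretCache:
    """Caches secrets in memory so the vault is not called on every use."""

    def __init__(self, fetch, ttl_seconds=300.0, clock=time.monotonic):
        self._fetch = fetch        # hypothetical callable: secret name -> secret value
        self._ttl = ttl_seconds    # how long a cached value is trusted
        self._clock = clock        # injectable clock, handy for testing
        self._entries = {}         # secret name -> (value, expiry time)

    def get(self, name):
        now = self._clock()
        entry = self._entries.get(name)
        if entry is not None and now < entry[1]:
            return entry[0]        # still fresh, no round trip to the vault
        value = self._fetch(name)  # missing or expired, go back to the vault
        self._entries[name] = (value, now + self._ttl)
        return value
```

The expiry is a trade-off: a longer time means fewer calls to the vault, but a secret that has been rotated in the vault takes longer to reach the application.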

Keys – Keys can be imported to the vault from your existing vaults; also, if your organization has Hardware Security Modules (HSMs), you can transfer keys directly to an HSM-based Azure Key Vault. Keys cannot be retrieved from the vault. For example, if you store the key of a symmetric encryption that encrypts files, you send the data to the vault and ask the vault to encrypt / decrypt it. Since keys cannot be retrieved from the vault, this provides higher isolation.

Keys can be stored in 2 different ways in the vault:
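To make the isolation idea concrete, here is a toy in-memory stand-in for a vault that holds a key and only exposes encrypt / decrypt operations. Everything in it is an assumption for illustration: `ToyKeyVault` and its methods are hypothetical, it is not the Azure Key Vault API, and the HMAC-based keystream is a teaching toy, not something to protect real data with.

```python
import hashlib
import hmac
import secrets


class ToyKeyVault:
    """Toy vault: callers can use a key by name but never read it."""

    def __init__(self):
        self._keys = {}  # key name -> raw key bytes, never returned to callers

    def create_key(self, name):
        self._keys[name] = secrets.token_bytes(32)

    def _keystream(self, name, nonce, length):
        # Toy keystream built from HMAC-SHA256(key, nonce + counter) blocks.
        out = b""
        counter = 0
        while len(out) < length:
            msg = nonce + counter.to_bytes(4, "big")
            out += hmac.new(self._keys[name], msg, hashlib.sha256).digest()
            counter += 1
        return out[:length]

    def encrypt(self, name, plaintext):
        # The caller sends data in; only the nonce and ciphertext come back.
        nonce = secrets.token_bytes(16)
        stream = self._keystream(name, nonce, len(plaintext))
        return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))

    def decrypt(self, name, ciphertext):
        nonce, body = ciphertext[:16], ciphertext[16:]
        stream = self._keystream(name, nonce, len(body))
        return bytes(c ^ s for c, s in zip(body, stream))
```

Note that there is deliberately no method that returns the key. The application only ever holds plaintext and ciphertext, which is the isolation described above, at the cost of a call to the vault for every operation.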

- Software protected keys

- Hardware protected keys

Software Protected Keys – These are available in the standard tier of the vault. Compared to hardware protection, this is theoretically less secure.

Hardware Protected Keys – HSMs add premium, hardware-based secure storage for the keys. This is the most advanced protection option the vault offers.

Provisioning Azure Key Vault

As Azure Key Vault is used to store sensitive information, authentication to it happens via Azure AD. Let me explain it in simple steps.

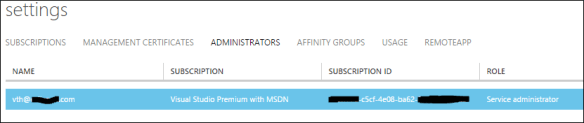

- First, a subscription administrator (either the service admin or a co-admin) creates an Azure Key Vault using PowerShell.

- Then the admin registers an Azure AD application and generates the App Id and the App Secret Key.

- The admin grants the App permission (trust) to access the Key Vault using PowerShell.

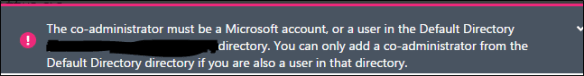

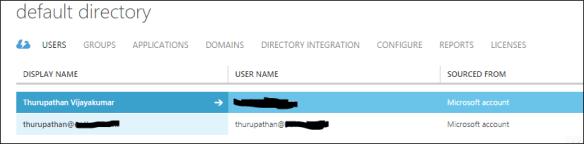

- The subscription where the Vault is created should be attached to the Azure AD where the accessing app from the step above is created.

- This ensures that the accessing app is an object of the Azure AD to which the Vault’s subscription is attached.

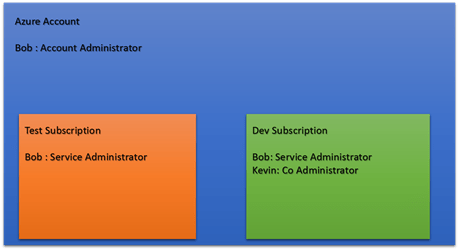

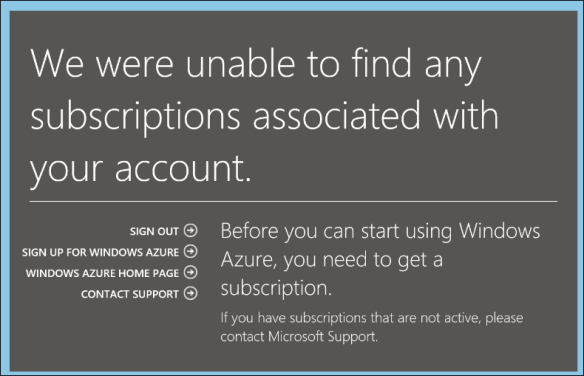

Sometimes the 4th and 5th points might be a bit confusing, and you might run into them especially when dealing with multiple Azure subscriptions. See the below image for a clear picture.

Assume that you have two subscriptions in your Azure account. If you create the Vault in the Development subscription, the app which can authenticate to the Vault should be in the Default AD. If you want to have the app in the Development AD, you have to change the directory of the Development subscription.
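The rule in the example above boils down to a single comparison, which the small sketch below spells out. The types and the function are hypothetical names of mine, and it assumes, as the example implies, that the Development subscription is currently attached to the Default AD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    name: str
    directory: str  # the Azure AD the subscription is attached to


@dataclass(frozen=True)
class AdApplication:
    app_id: str
    directory: str  # the Azure AD the app is registered in


def can_authenticate(app, vault_subscription):
    """The app must live in the directory the Vault's subscription is attached to."""
    return app.directory == vault_subscription.directory


# Assumed setup: the Development subscription is attached to the Default AD.
development = Subscription("Development", directory="Default AD")
app_in_default = AdApplication("app-1", directory="Default AD")
app_in_dev_ad = AdApplication("app-2", directory="Development AD")
```

With this setup, `can_authenticate(app_in_default, development)` is true and `can_authenticate(app_in_dev_ad, development)` is false. The second one only becomes true once the subscription's directory is changed to the Development AD.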

Usage

Assume MassRover is a fictional multi-tenant application on Azure.

ISV owns the Azure Key Vault

Scenario 1 (using secrets for the encryption) – MassRover allows users to upload documents and promises its tenants a high level of data confidentiality, so it should encrypt the data at rest. MassRover uses its own Azure Key Vault to store the secrets (which are the encryption keys). A trust has been set up between the Azure Key Vault and the MassRover AD client application. The MassRover web app authenticates to the Azure Key Vault, retrieves the secrets, and performs the encryption / decryption of the data.

Scenario 2 (using keys) – The MassRover Azure Key Vault stores keys which cannot be retrieved out of the Vault. So the web app authenticates itself with the Vault and sends the data to the Vault to perform the encryption or decryption. This scenario has higher latency than scenario 1, because the data has to travel to the Vault for every operation.

Tenant owns the Azure Key Vault

Tenants can own their Key Vault and give access to MassRover by sharing the authorized application Id and application secret. This is an added benefit if the tenants worry about ISVs keeping the keys in their own subscription and administrative boundary. Tenant-maintained Key Vaults certainly give additional policy-based security, but latency is high since the data has to travel across different networks. (This could be solved to a certain extent if the tenant provisions the Key Vault in the same region.)

Tenant-maintained Key Vaults also have the 2 scenarios explained above: go with secrets or go with keys.

Scenario 3 (using secrets)

Scenario 4 (using keys)

Useful links

Azure Key Vault NuGet packages (at the time of this writing they are in pre-release stage): http://www.nuget.org/packages/Microsoft.Azure.KeyVault/

PowerShell for provisioning Azure Key Vault and .NET code sample : https://github.com/thuru/AzureKeyVaultSample

Channel 9 – http://channel9.msdn.com/Shows/Cloud+Cover/Episode-169-Azure-Key-Vault-with-Sumedh-Barde